This is What Makes Instagram Flag Your Photo as ‘Made With AI’

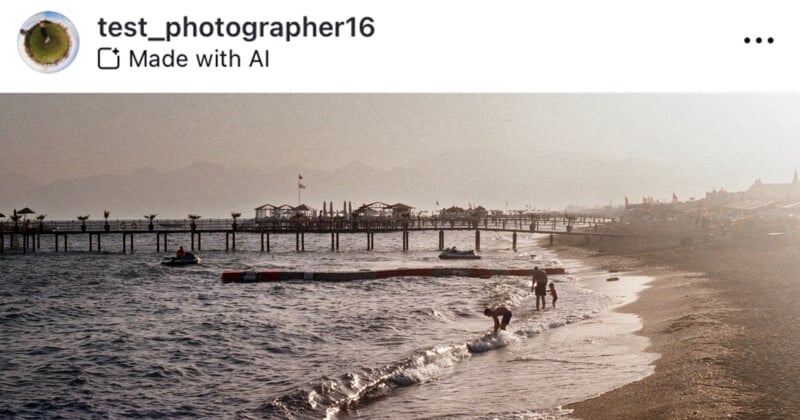

Instagram has recently been rolling out its “Made with AI” tag, which ostensibly flags AI-generated images on the platform, but it has enraged photographers by flagging photos that are not AI-generated.

Update 6/25: This article has been updated with statements provided by Adobe concerning content credentials, early access generative AI features, and the company’s broader stance on content authenticity. The updated text is included at the end of the section titled ‘Generative Remove Tool.’

Instagram has revealed very little about how it detects AI content, saying only that it uses “industry-standard indicators.” With this in mind, PetaPixel tried to find all the specific ways the “Made with AI” sticker gets attached to an Instagram post.

Which Photoshop Tools Will Trigger the ‘Made With AI’ Label on Instagram?

Generative Fill

Perhaps one of the biggest bugbears for photographers is that making a minor adjustment to an image using an AI-powered tool in Photoshop results in the post being given a “Made with AI” label.

Just like in my previous tests, when I remove a speck of dust in Photoshop using Adobe’s Generative Fill tool, Instagram gives the photo a “Made with AI” tag once it is uploaded. This happens despite the fact that I could have achieved the exact same result with the Spot Healing Brush tool or Content-Aware Fill, neither of which triggers the AI label.

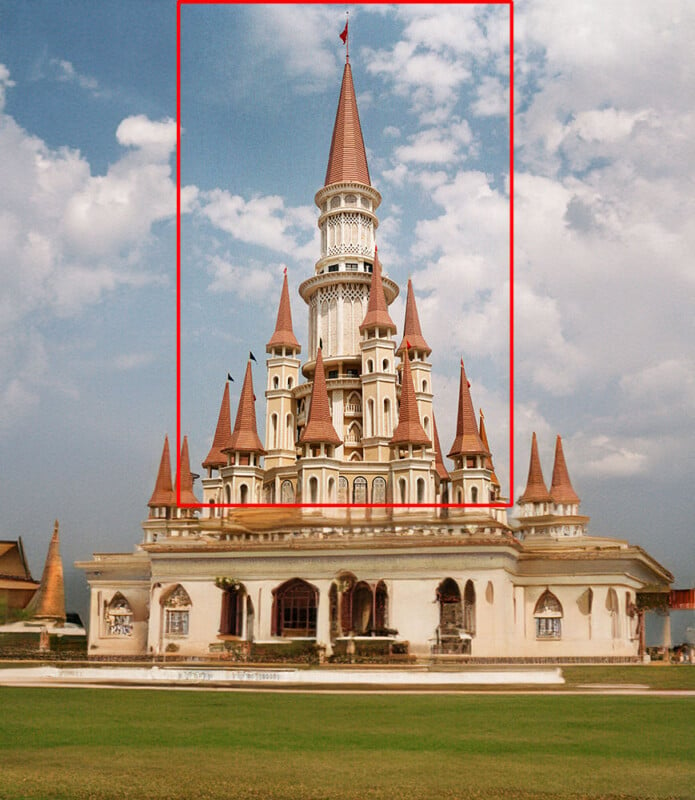

Of course, you can also make big alterations to a photo using Generative Fill, as in the example below, where I used the Reference Image feature, which lets you upload a reference photo to guide Generative Fill. That also triggers the label.

Generative Expand

Generative Expand, a tool that appears as an option when cropping out from an image, is powered by the same AI technology as Generative Fill, so it is perhaps no surprise that using this tool will also trigger a “Made with AI” label.

Notable Exceptions in Adobe Lightroom and Photoshop

Generative Remove Tool

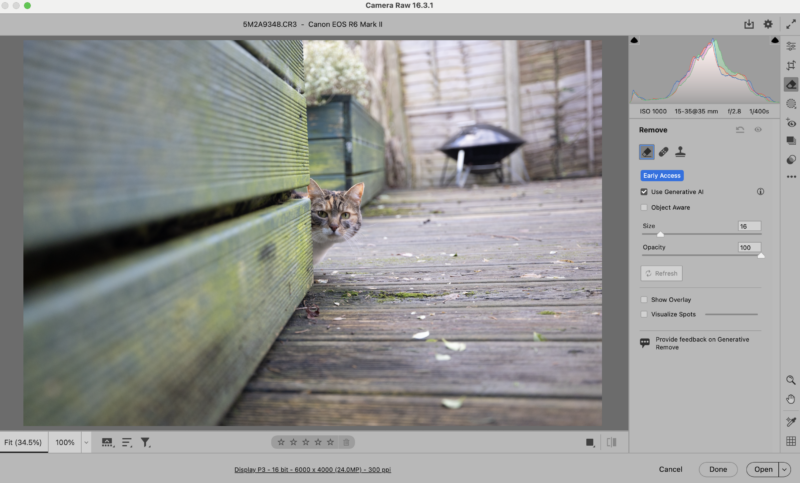

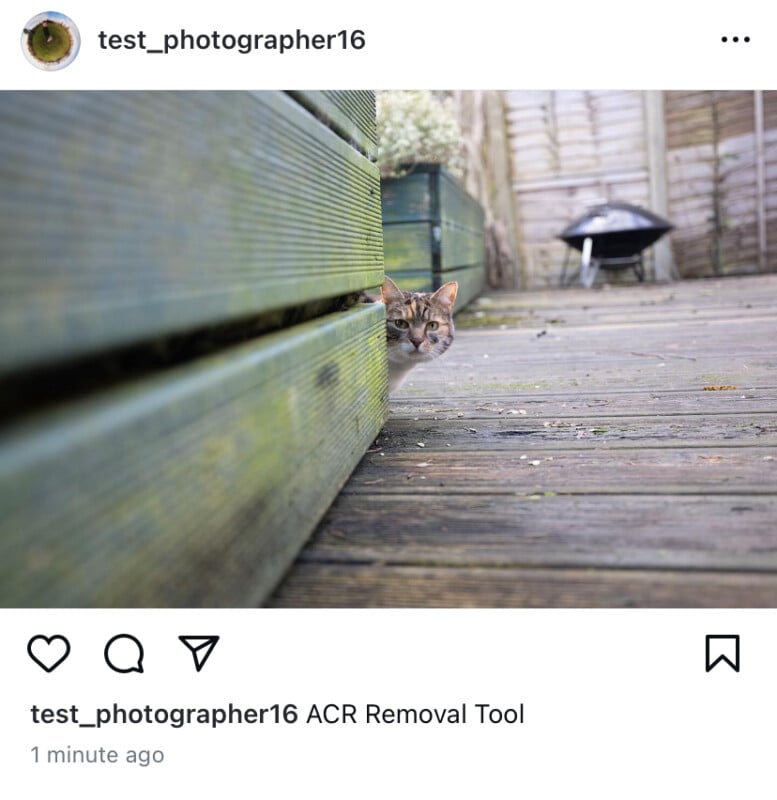

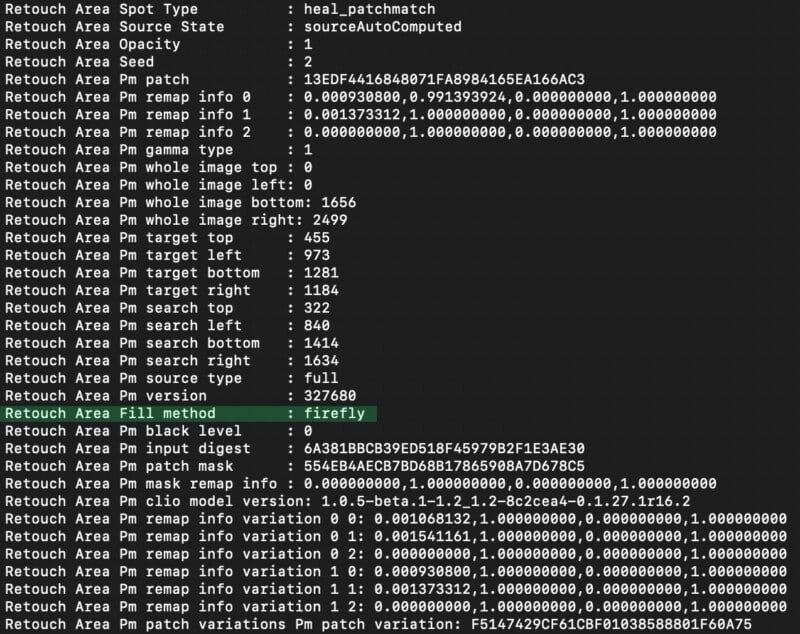

There is a new Generative Remove tool in Adobe Lightroom and Adobe Camera Raw (ACR), and despite it using generative AI technology, it does not trip Instagram’s “Made with AI” label.

Generative Remove is one of the newest and most impressive AI-powered features in Adobe Lightroom and Photoshop (via Adobe Camera Raw). Currently in early access (beta), Generative Remove uses Adobe Firefly technology to remove a brushed object from an image and replace it with new pixels that match the rest of the image.

While the results aren’t always much different from those of something like the Spot Healing tool, Generative Remove has proved much more reliable and sophisticated in initial testing.

Despite using generative AI technology, an image edited with Generative Remove, as much a Firefly tool as any other, doesn’t get flagged as “Made with AI” in Instagram. Why?

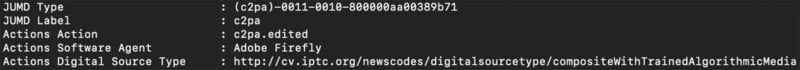

Unlike an image edited using Generative Fill, a photo edited with Generative Remove does not get tagged with any C2PA information. C2PA data is vital to Adobe’s Content Authenticity Initiative — a growing group that Meta is notably absent from — because it enables software tools to know when an image has been edited.

While a file edited in Lightroom using Generative Remove does carry a “Firefly” tag in its “Retouch Area Fill method” field, a photo with Generative Fill applied has far more provenance data in its metadata. In the JUMD Type and JUMD Label fields, there are C2PA flags, plus an explicit mention of “Adobe Firefly.” There are also C2PA claim generator flags and a C2PA signature in the metadata.
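To make the difference concrete, here is a minimal Python sketch of the kind of check that would separate the two files. It is purely illustrative: the marker list is our assumption based on the field names above (`jumb` and `jumd` are JUMBF box types, and `c2pa` is the manifest label used by the C2PA standard), the function name `find_provenance_markers` is hypothetical, and the sample byte strings are synthetic stand-ins rather than real image files. Nothing here is confirmed to be how Instagram or Adobe actually inspects uploads.

```python
# Illustrative only: this marker list is an assumption drawn from the metadata
# fields described in the article, not Meta's or Adobe's actual logic.
PROVENANCE_MARKERS = {
    "JUMBF superbox": b"jumb",
    "JUMBF description box": b"jumd",
    "C2PA manifest label": b"c2pa",
    "Adobe Firefly mention": b"Adobe Firefly",
}


def find_provenance_markers(data: bytes) -> list[str]:
    """Return the names of the markers whose byte strings appear in the data."""
    return [name for name, needle in PROVENANCE_MARKERS.items() if needle in data]


# Synthetic stand-ins for file contents (not real image files).
generative_fill_like = b"\xff\xd8...jumb....jumd c2pa ... Adobe Firefly ...\xff\xd9"
generative_remove_like = b"\xff\xd8... Retouch Area Fill method: Firefly ...\xff\xd9"

print(find_provenance_markers(generative_fill_like))
print(find_provenance_markers(generative_remove_like))
```

In this sketch, the Generative Fill stand-in matches every marker, while the Generative Remove stand-in matches none, even though the word “Firefly” appears in it. A detector keyed to C2PA data would treat the two files very differently.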

The inconsistencies underscore part of the issue with Meta’s “Made with AI” label. It is utterly slapdash in its execution and does nothing substantive to reduce the potential harm caused by AI-generated and edited photos.

As for Adobe’s stance, the company explains in an email response to PetaPixel: “Generative Remove is available in Early Access. When Generative Remove becomes generally available, Content Credentials will be automatically attached to photos edited with the feature in Lightroom. Like a ‘nutrition label’ for digital content, Content Credentials are tamper-evident metadata that can provide important information about how content was created, modified and published.”

The company did one better, going beyond PetaPixel‘s original question, with Andy Parsons, Senior Director of the Content Authenticity Initiative (CAI) at Adobe, providing the following statement:

Content Credentials are an open technical standard designed to provide important information like a ‘nutrition label’ for digital content such as the creator’s name, the date an image was created, what tools were used and any edits that were made, including if generative AI was used. At Adobe we are excited by the promise and potential of the integration of AI into creative workflows to transform how people imagine, ideate, and create. In a world where anything digital can be edited, we recognize how important it is for Content Credentials to carry the context to make clear how content was created and edited, including if the content was wholly generated by a generative AI model. The Content Credentials standard was designed from the ground up to clearly express this context.

Through our role leading the Content Authenticity Initiative (CAI) and co-founding the Coalition for Content Provenance and Authenticity, we understand how to best express how content has been edited is an evolving process. We know millions of users use AI today to perform the same aesthetic improvements to content as they did before AI. That’s why when it comes to labeling AI, we believe platforms labeling content as being made with or generated by AI should only do so when an image is wholly AI generated. That way, people will easily understand that the content they are viewing is entirely fake. If generative AI is only used in the editing process, the full context of Content Credentials should be viewable to provide deeper context into the authenticity, edits or underlying facts the creator may want to communicate.

Other Tools in Photoshop

In PetaPixel’s tests, the following tools also did not trigger Instagram’s AI label: Neural Filters, Sky Replacement, AI-powered noise reduction, and Super Resolution. It is worth noting that these features use Adobe Sensei AI technology, not Firefly. Then again, Meta’s tag isn’t “Made with Firefly,” it’s “Made with AI.”

Do AI-Generated Images Trigger the ‘Made With AI’ Label on Instagram?

DALL-E

OpenAI’s DALL-E image generator is a popular choice, and it will trigger Meta’s “Made with AI” label.

Adobe Firefly

Given how some Firefly-powered features result in Meta’s “Made with AI” tag appearing on Instagram, it should come as little surprise that an image outright generated using Firefly comes with an AI content warning.

Stable Diffusion

That said, not all AI image generators cause the “Made with AI” tag to appear. Users can create images in Stable Diffusion, export them from the platform, and upload them to Instagram without any concerns about an AI label.

Meta’s AI Image Generator

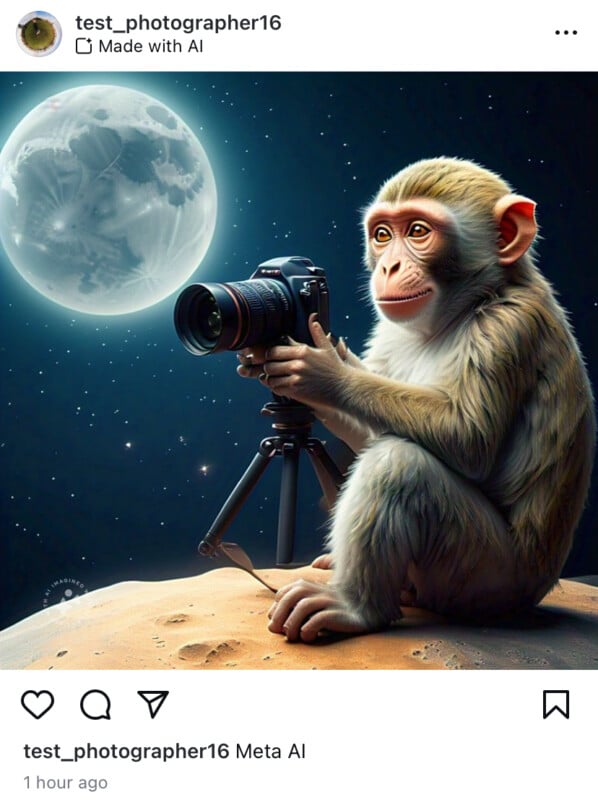

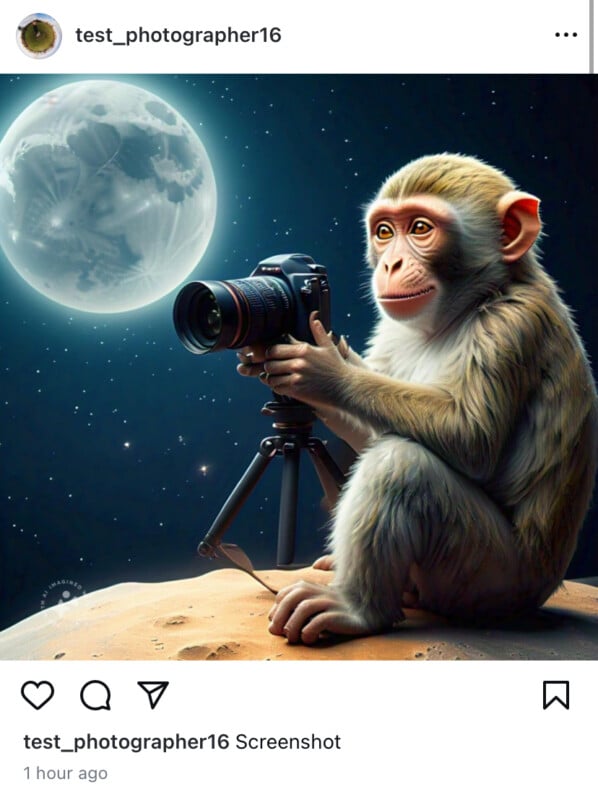

Instagram’s parent company Meta has its own AI image generator, which we cheekily used to see whether Meta would flag its own AI images.

And while Instagram does flag them, a simple screenshot bypasses the label, even though Meta’s AI watermark is still visible on the image itself.

What Does Instagram’s ‘Made with AI’ Label Actually Achieve?

Considering how easy it is to circumvent Meta’s AI-detection system (if you know how to edit photos, you can bypass Meta’s current checks), it raises the question, “What’s the point?” So far, Instagram’s “Made with AI” label has managed to incorrectly label some real, very-much-not-AI photos, sullying the reputation of respected photographers. It has also completely missed photos that really were edited with AI, including ones where no attempt was made to camouflage the use of generative AI at all.

While the need for some sort of content authenticity is real, and growing ever more essential by the day, Meta’s approach is ham-fisted. All indications point to a simple metadata scrape, which is not only easy to deceive, but unreliable depending on the software and individual tools a person uses to create an image.
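A short Python sketch shows why a metadata-only check is so fragile. It assumes, per the C2PA specification as we understand it, that JPEG files carry their C2PA data in APP11 segments, and it works on a synthetic byte stream rather than a real photo. The helper names `strip_app11` and `segment` are hypothetical, and the parser is deliberately simplified (it ignores rare markers that carry no length field).

```python
import struct


def strip_app11(jpeg: bytes) -> bytes:
    """Copy a JPEG byte stream, omitting any APP11 (0xFFEB) segments."""
    if jpeg[:2] != b"\xff\xd8":
        raise ValueError("not a JPEG stream (missing SOI marker)")
    out = bytearray(jpeg[:2])
    pos = 2
    while pos + 4 <= len(jpeg):
        marker = jpeg[pos:pos + 2]
        if marker[0] != 0xFF:
            raise ValueError("malformed segment header")
        if marker == b"\xff\xda":  # start of scan: compressed pixels follow
            break
        # The length field counts itself (2 bytes) plus the payload.
        (length,) = struct.unpack(">H", jpeg[pos + 2:pos + 4])
        if marker != b"\xff\xeb":  # keep every segment except APP11
            out += jpeg[pos:pos + 2 + length]
        pos += 2 + length
    out += jpeg[pos:]  # copy the scan data and trailer untouched
    return bytes(out)


def segment(marker: bytes, payload: bytes) -> bytes:
    """Build one length-prefixed JPEG segment for the synthetic sample."""
    return marker + struct.pack(">H", len(payload) + 2) + payload


# Synthetic stream: SOI, a fake APP11 provenance segment, a dummy table,
# then stand-in "pixel data" and the end-of-image marker.
sample = (
    b"\xff\xd8"
    + segment(b"\xff\xeb", b"JP..jumb..c2pa..")
    + segment(b"\xff\xdb", b"\x00" * 4)
    + b"\xff\xda" + b"pixel-data" + b"\xff\xd9"
)
stripped = strip_app11(sample)
print(b"c2pa" in sample, b"c2pa" in stripped)
```

The image data after the start-of-scan marker is copied byte for byte, so the picture itself is unchanged. Only the segment that a metadata scrape would read is gone, which is effectively what a screenshot or a re-export through the wrong tool does.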

Arguably, a C2PA-based approach makes a ton of sense, but Meta has it all backward. Instead of looking for evidence that an image has been edited using generative AI, perhaps Instagram should look for evidence that an image hasn’t been edited, and those images can get a label that verifies them as authentic. This type of technology already exists, and the Content Authenticity Initiative is hard at work developing ways to make it more accessible. If only Meta wanted to play ball.

Instead, photographers have to worry about whether their legitimate images will be mislabeled. There’s no doubt that in the age of AI, it is hard to trust what we see online. Even totally legitimate images have come under undue scrutiny, long before “Made with AI” labels started appearing on Instagram.

It’s a big problem that requires a thoughtful solution, and the current iteration of “Made with AI” labels on Instagram is definitely not it.

A Constantly Moving Target

We have endeavored to find every photo editing tool that will trigger a “Made with AI” label, but there may be more, and we will update this article if and when we find any. There is also a significant likelihood that Meta’s seemingly rudimentary AI-detection technology will undergo continual changes, meaning that some features that don’t currently trigger a “Made with AI” label may do so in the future.

Additional reporting by Jeremy Gray.